Runway has released Motion Brush, a new AI tool for its Gen-2 model that lets users create short videos from a single image. At launch, Runway Motion Brush is free to use.

Generative artificial intelligence (AI) has become the hottest topic in the rapidly evolving field of technology. It is changing tasks ranging from solving difficult equations quickly to generating visuals from text prompts.

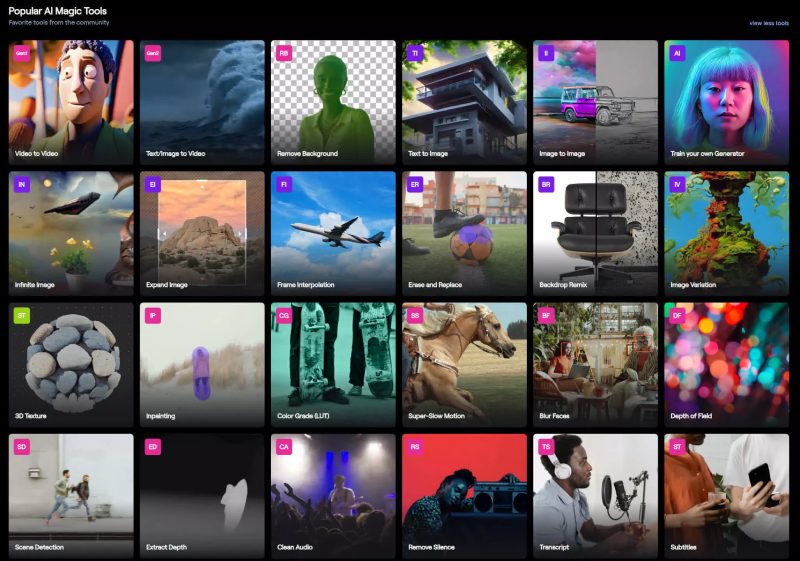

One of the leading companies in this space is Runway, a generative AI platform designed specifically for multimedia production. From a brief prompt, it can create audio, images, videos, and 3D models. What's more, you can get started for free.

With tools such as Runway Motion Brush, Runway can also turn any image into a video, including images generated with models like Midjourney.

Runway Gen-2, the company's most recent offering, is a multimodal AI system that can generate images and videos from text. Even more conveniently, Runway has an iOS app that lets users create multimedia content on their smartphones.

With Runway Gen-2, users can create new videos from simple text prompts. Free accounts can generate four-second videos that can be shared and downloaded on any device, though these carry a watermark.

Each second of generated video costs five credits, and free users receive 500 credits. For more advanced features and tools, a $12-per-month plan offers more options for customizing the output.
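To make the credit math concrete, here is a small sketch of how far the free allowance goes. It assumes a flat rate of five credits per second, charged per whole second, and ignores any other pricing rules Runway may apply; the constant and function names are hypothetical and only for illustration.

```python
# Hypothetical constants taken from the figures quoted above (assumed flat rate).
CREDITS_PER_SECOND = 5
FREE_CREDITS = 500
FREE_CLIP_SECONDS = 4  # length of a free-tier Gen-2 clip


def seconds_affordable(credits, credits_per_second=CREDITS_PER_SECOND):
    """Whole seconds of video a credit balance covers at a flat per-second rate."""
    return credits // credits_per_second


def clips_affordable(credits, clip_seconds=FREE_CLIP_SECONDS,
                     credits_per_second=CREDITS_PER_SECOND):
    """Whole clips of a fixed length a credit balance covers."""
    return credits // (clip_seconds * credits_per_second)


print(seconds_affordable(FREE_CREDITS))  # 100
print(clips_affordable(FREE_CREDITS))    # 25
```

Under these assumptions, 500 free credits cover about 100 seconds of video, or 25 four-second clips.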

Meanwhile, Stability AI unveiled Stable Video Diffusion, a state-of-the-art AI research tool that can turn a still image into a short video. The release, an open-weights research preview of two image-to-video AI models, can run locally on computers equipped with Nvidia GPUs.

Stability AI rose to prominence last year with the release of Stable Diffusion, an open-weights image synthesis model that sparked an enthusiast community, which extended the technique with its own modifications.

Stability now wants to replicate this success with AI video synthesis. Stable Video Diffusion currently consists of two models, "SVD" and "SVD-XT," which generate 14 and 25 frames of video, respectively. The models run at different speeds and can produce short MP4 clips at a resolution of 576×1024, usually lasting two to four seconds.

Stability emphasizes that the model is still at an early stage and is intended for research use only.

The company notes that although it is continually updating the models and seeking feedback on quality and safety, they are not yet suitable for commercial or real-world applications. It says the insights and feedback it receives will help shape future versions of the model.
